Hadoop Architecture

The Big Data Hadoop Architect Master's Program offers a structured learning path designed to make you a qualified Hadoop Architect. This data architect certification gives you an in-depth education in the Hadoop development framework, including real-time processing with Spark, NoSQL database technology, and other Big Data technologies such as Storm, Kafka and Impala.

- Introduction to Data Storage and Processing

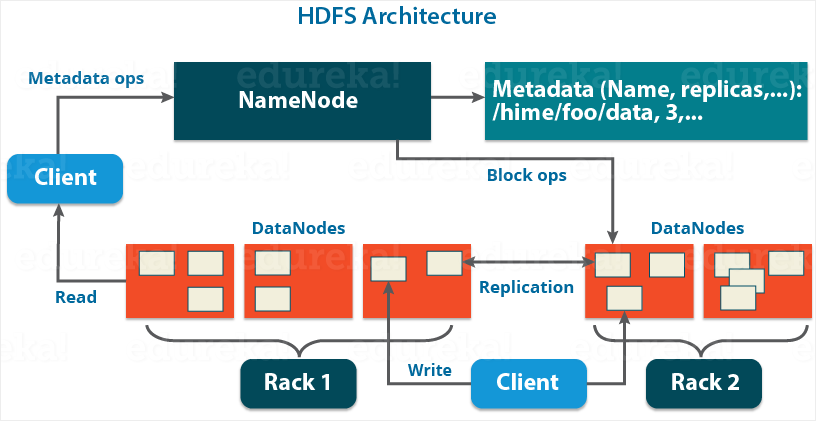

- Installing the Hadoop Distributed File System (HDFS)

- Defining key design assumptions and architecture

- Configuring and setting up the file system

- Issuing commands from the console

- Reading and writing files

- Setting the stage for MapReduce

- Reviewing the MapReduce approach

- Introducing the computing daemons

- Dissecting a MapReduce job

- Defining Hadoop Cluster Requirements

- Planning the architecture

- Selecting appropriate hardware

- Designing a scalable cluster

- Building the cluster

- Installing Hadoop daemons

- Optimizing the network architecture

- Configuring a Cluster

- Preparing HDFS

- Setting basic configuration parameters

- Configuring block allocation, redundancy and replication

- Deploying MapReduce

- Installing and setting up the MapReduce environment

- Delivering redundant load balancing via Rack Awareness

- Maximizing HDFS Robustness

- Creating a fault-tolerant file system

- Isolating single points of failure

- Maintaining High Availability

- Triggering manual failover

- Automating failover with ZooKeeper

- Leveraging NameNode Federation

- Extending HDFS resources

- Managing the namespace volumes

- Introducing YARN

- Critiquing the YARN architecture

- Identifying the new daemons

- Managing Resources and Cluster Health

- Allocating resources

- Setting quotas to constrain HDFS utilization

- Prioritizing access to MapReduce using schedulers

- Maintaining HDFS

- Starting and stopping Hadoop daemons

- Monitoring HDFS status

- Adding and removing data nodes

- Administering MapReduce

- Managing MapReduce jobs

- Tracking progress with monitoring tools

- Commissioning and decommissioning compute nodes

- Extending Hadoop

- Simplifying information access

- Enabling SQL-like querying with Hive

- Installing Pig to create MapReduce jobs

- Integrating additional elements of the ecosystem

- Imposing a tabular view on HDFS with HBase

- Configuring Oozie to schedule workflows

- Implementing Data Ingress and Egress

- Facilitating generic input/output

- Moving bulk data into and out of Hadoop

- Transmitting HDFS data over HTTP with WebHDFS

- Acquiring application-specific data

- Collecting multi-sourced log files with Flume

- Importing and exporting relational information with Sqoop
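The "Dissecting a MapReduce job" topic in the outline can be previewed without a cluster. The following is a minimal single-process sketch in Python (not Hadoop's Java API) of the three phases of a word-count job: the map step emits `(word, 1)` pairs, the shuffle/sort step groups values by key, and the reduce step sums each group. The function names are hypothetical and chosen for illustration only.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Mapper (hypothetical name): emit (word, 1) for every whitespace-separated token.
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    # Shuffle/sort: group all values by key and return the groups in key order,
    # mirroring what the framework does between the map and reduce phases.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key, values):
    # Reducer: sum the counts collected for one key.
    return key, sum(values)


def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return [reduce_phase(key, values) for key, values in shuffle_phase(mapped)]


print(word_count(["the quick brown fox", "The lazy dog"]))
# [('brown', 1), ('dog', 1), ('fox', 1), ('lazy', 1), ('quick', 1), ('the', 2)]
```

On a real cluster the framework runs many mappers and reducers in parallel and performs the shuffle over the network; this sketch only mirrors the data flow.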
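Configuring block allocation, redundancy and replication, as listed in the outline, comes down to simple arithmetic: HDFS splits each file into fixed-size blocks and stores several copies of every block. The sketch below assumes the Hadoop 2.x/3.x default block size of 128 MiB (`dfs.blocksize`) and the default replication factor of 3 (`dfs.replication`); the helper `hdfs_footprint` is hypothetical.

```python
MIB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MIB  # assumed default for dfs.blocksize (Hadoop 2.x/3.x)
DEFAULT_REPLICATION = 3         # default for dfs.replication


def hdfs_footprint(file_size, block_size=DEFAULT_BLOCK_SIZE,
                   replication=DEFAULT_REPLICATION):
    """Return (blocks, block_replicas, raw_bytes) for one file stored in HDFS.

    The last block is not padded to the full block size, so the raw disk
    usage is simply file_size * replication.
    """
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    if block_size <= 0 or replication <= 0:
        raise ValueError("block_size and replication must be positive")
    blocks = (file_size + block_size - 1) // block_size  # ceiling division
    return blocks, blocks * replication, file_size * replication


# 1 GiB plus one byte: 8 full blocks and one 1-byte block.
print(hdfs_footprint(1024 * MIB + 1))  # (9, 27, 3221225475)
```

The tiny trailing block still counts as a full block object (with three replicas) in the NameNode's metadata, which is one reason large numbers of small files are costly in HDFS.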
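Rack awareness, listed in the outline under deploying MapReduce, matters because of how HDFS places block replicas. According to the HDFS architecture documentation, with a replication factor of 3 the default policy puts one replica on the writer's node (when the writer runs on a DataNode), a second on a node in a different rack, and the third on a different node in that same remote rack. The sketch below assumes a hypothetical `topology` dictionary mapping node names to rack paths; unlike the real policy, it picks candidates in sorted order rather than at random and ignores node load and free space.

```python
def place_replicas(topology, writer):
    """Pick three DataNodes following the default rack-aware placement rule.

    topology: dict mapping DataNode name -> rack path (e.g. "/rack1").
    Simplified: deterministic choice, no load or capacity checks.
    """
    if writer not in topology:
        raise ValueError("this sketch assumes the writer runs on a DataNode")
    first = writer                       # replica 1: the writer's own node
    local_rack = topology[first]
    remote = sorted(node for node, rack in topology.items() if rack != local_rack)
    if not remote:
        raise ValueError("rack-aware placement needs at least two racks")
    second = remote[0]                   # replica 2: a node on another rack
    remote_rack = topology[second]
    peers = [n for n in remote if topology[n] == remote_rack and n != second]
    if not peers:
        raise ValueError("the remote rack needs at least two nodes")
    third = peers[0]                     # replica 3: same remote rack, other node
    return [first, second, third]


topology = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}
print(place_replicas(topology, "dn2"))  # ['dn2', 'dn3', 'dn4']
```

The three replicas span exactly two racks, so losing a whole rack still leaves at least one copy, while the write pipeline crosses between racks only once.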
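Space quotas (the "Setting quotas to constrain HDFS utilization" topic in the outline) often surprise new administrators because, per the HDFS quotas guide, every replica counts against a directory's space quota and a block allocation fails unless the quota leaves room for a full block. The sketch below models only that check; the function name is hypothetical, and it assumes a 128 MiB block size and a replication factor of 3.

```python
MIB = 1024 * 1024


def block_allocation_fits(consumed, space_quota, block_size=128 * MIB, replication=3):
    """Return True if a directory's space quota leaves room for one more block.

    consumed and space_quota are in bytes. All replicas count against the
    quota, and room for a *full* block is required even if the file will
    only contain a few bytes.
    """
    return consumed + block_size * replication <= space_quota


# A 300 MiB quota cannot hold even a tiny new file: one block needs 384 MiB.
print(block_allocation_fits(0, 300 * MIB))   # False
print(block_allocation_fits(0, 1024 * MIB))  # True
```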
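For "Transmitting HDFS data over HTTP with WebHDFS" from the outline, the key idea is that each file system operation becomes an HTTP request against a URL of the form `http://<HOST>:<HTTP_PORT>/webhdfs/v1/<PATH>?op=...`. The helper below only builds such URLs; the host name and user are hypothetical, and the default port 9870 assumes a Hadoop 3.x NameNode (Hadoop 2.x used 50070).

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, path, op, params=None, port=9870):
    """Build a WebHDFS REST URL: http://<host>:<port>/webhdfs/v1/<path>?op=<OP>&...

    port 9870 is the Hadoop 3.x default NameNode HTTP port (assumption:
    adjust for your cluster). Extra query parameters go in params.
    """
    if not path.startswith("/"):
        raise ValueError("HDFS path must be absolute")
    query = {"op": op.upper()}
    query.update(params or {})
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{urlencode(query)}"


# Read the first KiB of a file as user "alice" (hypothetical host and user).
print(webhdfs_url("namenode.example.com", "/user/alice/data.csv", "open",
                  {"user.name": "alice", "offset": 0, "length": 1024}))
```

A GET on an `OPEN` URL is answered by the NameNode with a redirect to a DataNode that streams the file contents; the `user.name` parameter applies only when the cluster uses simple (non-Kerberos) authentication.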

Our distinct services include:

- Material and Documents: We provide our students with real-world working documents and materials.

- Free System Access: We provide the latest, most up-to-date platforms, deployed on high-end servers and available 24/7, both remotely and in class.

- Interview Preparation: We prepare you for interviews in your specialized area with real-world interview questions. We also conduct mock interviews and help you prepare a professional resume.

- Placement Assistance: Our placement service division provides job placement support by submitting your resume to prospective clients and emailing you new job opportunities in the market. However, we do not make false guarantees about securing a job for you. Several former students have secured excellent jobs thanks to our world-class training approach, case study projects and post-training support.

- Initial Job Stabilization Support: We help our students settle into their jobs during the first two months. If students run into any issues, they can email us, and our experts will reply with possible solutions within 24 hours.

- Free Classroom Session Recordings: In addition to the e-class training, we authorize you to record all of your e-classroom training sessions so that you can go back and review them.

Key Points of Training Program:

How beneficial is the Hadoop Course and Certification?

Dice says, "Technology professionals should be volunteering for Big Data projects, which make them more valuable to their current employer and more marketable to other employers."

Key advantages are:

- Many of the top companies around the globe use Hadoop technology.

- As a result, there are many exciting job opportunities.

- Because Hadoop skills are valuable and in demand, lucrative salary packages are offered.

- Hadoop expertise offers a path to fast career growth.

Who should attend Hadoop Course?

Hadoop technology training is best suited for software engineers with an ETL or programming background. It is also relevant for managers who work with the latest technologies and data management. The course will also benefit the career advancement of .NET developers and data analysts who develop applications and perform data analysis using the Hortonworks Data Platform for Windows.


Prerequisites

Hands-on experience in Core Java.

Good analytical skills to grasp and apply concepts.

Programming experience with Visual Studio and SQL.

Familiarity with Windows Server OS.